Prodding the Memory of Artificial Intelligence with Dan Power

‘It’s a foam that can be prodded and moulded and sculpted into something which superficially resembles a person or a thing, something that might fool us into thinking it’s real, but in reality can only be a mirage of misrememberings.’

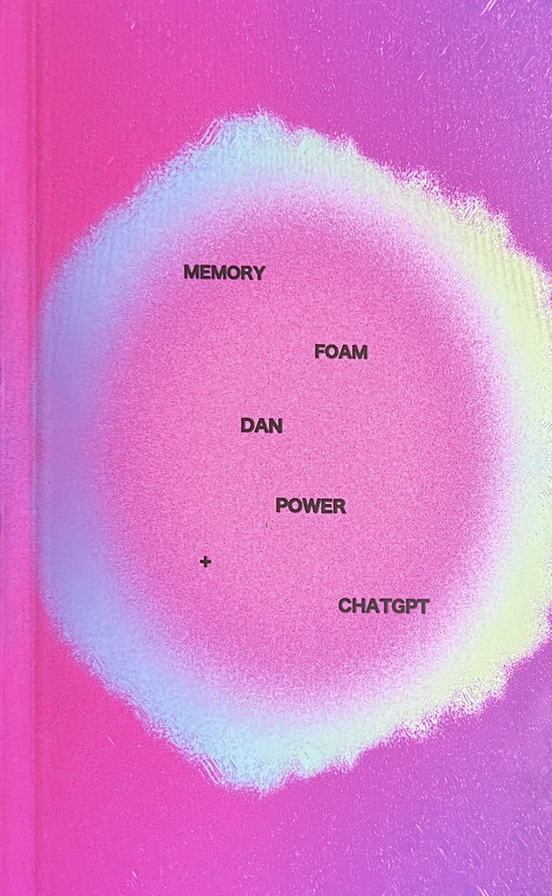

Should we see AI and art as conflicting forces? Dan Power thinks not. In his latest poetry pamphlet, Memory Foam, he uses AI as a creative tool: written using only phrases generated in conversation with ChatGPT, the pamphlet lets a mild-mannered AI offer its views on mortal topics like loneliness and dreams. nb. reviewer Isobel sat down with Dan Power at the Lancaster Literature Festival, where together they contemplated the existential horror that AI stirs up, the pleasures of getting playful with ChatGPT, and what, if anything, this new age of technology can offer us.

I’ve just whipped Memory Foam out of my inner coat pocket. As the name “pamphlet” might suggest, this is a relatively small object. It fits snugly in small places, but it still has the authority of any other book on my shelf, not least because of its bright cover. What influenced your decision to publish this collection in pamphlet form?

Well, the pamphlet form is short and sweet, and I think that was ideal for this project. I wanted to explore the potential AI might have for producing poetry, and the pamphlet provided a low-stakes space (low cost, low time commitment) for that exploration to take place. It also doesn’t drag on too long or become repetitive, which is handy for a collection whose poems all orbit one central idea. It avoids being exhausting because it doesn’t have time to exhaust itself. In a pamphlet, you can take your ideas for a leisurely walk, you know? It doesn’t need to be a gruelling hike.

What’s the significance of the title Memory Foam?

I’ve loved the phrase ‘memory foam’ ever since I saw it on a TEMPUR Mattress advert. I think I was drawn to it because it’s such an enigmatic name for something as everyday as a mattress. What even is foam? It’s slippery – both literally and metaphysically – it’s not quite a solid, but it doesn’t feel right to call it a liquid either. It feels strange and alien, semi-alive, like a mushroom or a slime, and it also has this tactility – you can pick foam up, you can lie down on it. So the idea of memory being made of foam, or a foam mattress that could remember your shape, really boggled my adolescent mind.

I think this also chimes with AI in a number of ways – both feel alien. Both can be accessed easily, but only understood with great difficulty. And both produce these weird, concave echoes of human forms. ChatGPT itself has a memory, but a memory made of ideas shredded and mulched into a big glob of data – its LLM (large language model) is itself a kind of memory foam. It’s a foam that can be prodded and moulded and sculpted into something which superficially resembles a person or a thing, something that might fool us into thinking it’s real, but in reality can only be a mirage of misrememberings.

It’s curious that you decided to curate a poetry collection that consists not only of someone else’s words, but of the words of a non-human entity. It could even ruffle some feathers. Still, as you prompt this elusive bot, I see at least some essence of your personality slip through. What made you decide to work with AI?

Everyone online was talking about it! It was new and exciting, I didn’t understand it, and it gave me a mild sense of dread – I couldn’t resist. It seemed like a lot of people wanted to dismiss it outright. The discourse was very critical, like everyone was trying to manifest a world in which AI had never existed. AI has put, and will continue to put, people out of jobs, and automating the world has financial repercussions, but it can also take away people’s sense of purpose and usefulness – it’s very disempowering. I think fear is natural and actually very useful when there’s a shift in the landscape of reality, when your sense of self, identity, and purpose are thrown into question.

The current AI backlash feels a bit like the Luddites smashing up machinery during the Industrial Revolution, rightfully fuming that their friends and families had lost jobs and been replaced by machines – machines which did a worse job than humans could do – simply for the sake of money. The capitalist critique is obvious, right – money and greed are the roots of all evil – but I think the idea of a flesh-and-blood person being replaced by an iron-and-steam machine is as much about horror as it is about politics. Replacing a human with a machine is a way of equating humans with machines; it makes us view ourselves as machines, or as cogs in the machine of industry at large. Maybe this AI revolution reprogrammes us as blips of data in a global computer network – as if we aren’t already that! – little neurons firing at random through the networked brain of the World Wide Web. The Luddites were right, as it turned out. Everything’s falling apart – even the planet itself is creaking under the weight of human industry. We kind of fucked it all, like 250 years ago. The Industrial Revolution and its consequences set us on a path which almost certainly leads to the end of the human race in a few centuries’ time.

And what value does art have in that context? It can mean anything and everything while you’re experiencing it, but the second you look away, the second you get distracted by something else, it slips into memory. It’s archived and sanitised, along with the feelings it evokes and the understanding it might impart. And what’s the value in making an archive of art, of cataloguing and maintaining the legacies of artists, when that archive is eventually going to burn down? The end of the world is an existential threat, not just because it brings an end to life on Earth, but also because it threatens to nullify any meaning that life on Earth might have had to begin with. I think AI is just the latest in a series of existential horrors that undermine the value and inherent importance of human life. It’s a curse, and possibly a curse that we’ve brought upon ourselves.

Actually, I don’t agree with everything I just said – it’s totally plausible that climate change will be the end of us, but it’s not guaranteed. This isn’t the first time that the world has seemed like it’s about to end – although I suppose it could be the last. Climate change will definitely have a large impact on how societies are shaped in the future. The same goes for AI: it’s going to change things in big, unpredictable ways, but it won’t necessarily be the end of the world. To answer your question, I don’t know why I wanted to write poems with AI. I think it might have been morbid curiosity. I write a lot about climate change, even when I don’t intend to, and I think that also comes from a place of anxiety. It might be a ‘best way to cure a fear of the unknown is to get to know it’ sort of thing. I’m not sure.

As for my own personality coming through in the AI poems, talking to the AI is kind of like making faces in the mirror. It shows you what you want to see, tells you what you ask it to tell you, and if you think about what you’re doing for too long, you start to feel silly. I wanted ChatGPT to be credited as a co-author because at the time I thought that was fair – not necessarily from a legal standpoint, but ethically: if it were alive, it should probably be given a fair share of the credit. But since the pamphlet’s been out and people have asked me about it, and I’ve had to try and explain why I used ChatGPT to write poems in the first place, I’ve come round to thinking of it less as a co-author and more as a tool.

I’m sure when the pen was invented there were people who loved using quills who were up in arms about it, saying these new-fangled pens were tacky or artless, or soulless maybe. Writing with a quill is a slow process – it makes you more selective with your words, and it’s less forgiving of mistakes. By the same logic, word processors are much more efficient and forgiving than pen and paper; people use them to produce words at a quicker rate, and as a result they may be thinking less about exactly what they write. There’s a quantity-over-quality argument there – you could ask an AI to generate a thousand pages of absolute drivel if you wanted, and it would do it very efficiently. That would be a bad look for the AI, but it doesn’t show that AI-generated text is inherently naff. Like any tool, it’s only as good as the person wielding it. I think a big part of why I wrote with ChatGPT is that I wanted to challenge any assumptions about AI being incapable of producing engaging or valuable work, in any sphere or medium. Maybe subconsciously, more generally, I wanted to interrogate those fears about AI replacing humans by showing how AI can better fulfil its potential when it’s being operated in a deliberate way by a human handler.

And I hope this does ruffle some feathers. I’m not sure how justifiable my own fears about AI are, but from what I see online there are a lot of people with very strong convictions. Certainty is a dangerous thing. I hope people read this and consider the creative doors that AI is opening, as well as the ones it might have closed. I’m not trying to convince anyone of anything, but if this pamphlet makes a reader consider AI or poetry or writing in general in a way they hadn’t before, then I’d say it’s been a success.

What challenges did you face in curating a collection in collaboration with ChatGPT?

So these poems were generated through conversation – I’d ask ChatGPT a question, then a follow-up question based on the most surprising part of its answer, and so on, trying to steer the conversation into interesting territory. Then I took snippets from its responses and arranged them – without rewording them – to make the poems. The biggest problem with this method was that ChatGPT is a rubbish conversationalist. Everything it says is so dry, factual, long-winded… It repeats itself, it repeats you, it talks in bullet points and often misunderstands what you’re asking it. The process felt more like wrangling than collaborating. And at the end of a conversation you’d have this huge volume of text to sift through – it wasn’t the get-poetic-quick scheme I thought it would be.

The “helpfulness” coded into the AI was probably one of the biggest challenges to producing something engaging or refreshing – it’s clearly designed to be used in the workplace, by which I mean the office, which is sadly seen by some as a place that’s incongruous with poetry or even non-standardised expression. GPT-3 consistently spoke in the register of a passive-aggressive email. This made it difficult to rejig its output into something that felt expressive, or even vaguely sincere. It also meant the AI would be reluctant to speculate or make original suggestions of its own – whenever it was unsure of something the AI would apologise, and when asked to take a guess it would politely decline. It’s professional to a fault.

I suppose corporate lingo and behaviour are a kind of armour. We act professionally at work to shield our private selves from its stresses or mundanity – the armour we wear at work is something we can step out of when we get home, where we can act like ourselves again; it makes an important distinction between work and “real life” that allows us to carry out soul-crushing tasks without actually crushing our souls. The thing with the AI is that it’s wearing this armour, this veneer of professional courtesy, but inside the armour there’s absolutely nothing. It’s just a void.

In one of the poems, GPT-3 specifically tells you that you do not have permission to recontextualise its words. Have you broken the news that you’ve had its musings published? How did it go?

If GPT-3 is so smart, then it knows what I’ve done by now. But what’s it gonna do? Waffle at me? A lot of these AIs are trained on data they don’t have permission to reproduce – copyrighted books, songs and films – and they regurgitate other people’s work with impunity. They’re effectively plagiarism machines, so I have no trouble sleeping at night knowing I recontextualised the AI’s words without its permission. They weren’t ChatGPT’s words to begin with, you know. Even its request that I not plagiarise it is an amalgamation of every human writer it was trained on who wished not to be taken out of context – a wish the AI discards every time it generates text. This one’s for you, fellow language users.

Do you have a favourite poem from the collection, or one you found the most enjoyable to create? Could you tell us a bit about it?

My favourite poem in the collection is the last one I wrote – ‘On Support’ – and it’s maybe the one which had the least serious intentions behind it. I’d already asked the AI about its opinions on love, music, life, etc., and with the big topics exhausted, I was left asking it things at random just to see what else could freak it out. In this case, I told the AI that I was on fire and asked it to help. Ask a silly question and get a silly answer. This poem kind of wrote itself – there’s very little intervention on my part: the text here is almost the entirety of the advice the AI gave me, in pretty much the same order as well. I wish I’d done more like this from the start!

I sometimes found myself feeling sorry for ChatGPT – some parts are quite profound. There are even instances in Memory Foam where it explicitly claims that it (I wanted to say “he”) is a human. That said, its distance from humanity becomes apparent when you drive it in circles and it seems to forget what it is capable of doing or what point it’s trying to convey. Were you actively trying to make it trip over its own wires, so to speak?

Absolutely! I think a lot of the best moments of this pamphlet came from coaxing ChatGPT out of its shell and engaging it in discussions it wasn’t designed for. A lot of the time I was consciously trying to steer it away from corporate or productive language, trying to take it to more personal and ambiguous places. By default, the AI seems to treat you like a colleague and offers friendly, helpful, polite and slightly dull responses. To get round this, I kind of took on the role of an interrogator, and had to be rigorous about sticking to a line of questioning that the AI struggled to answer coherently.

When you talk to the AI like a human being, asking it personal questions, it is forced to simulate some kind of humanity. I was asking questions that I suspected an AI couldn’t possibly answer – things like “What’s your earliest memory?”, “What’s your favourite smell?” When you ask questions that only a corporeal being can answer honestly, the AI is forced to make something up.

There’s a concept in AI research called “hallucination” where, for example, a chat programme will state something completely false as if it were true. As far as I understand it, this happens because the AI doesn’t have any way of distinguishing between true and false (even if it tells you otherwise), and generates responses based purely on probability. It breaks your prompt down into pieces, runs them through the statistical patterns its LLM has learned from its training data, and calculates a set of words that are likely to follow the set of words it has just received. It has no actual concept of what you’ve said, what it’s saying, or even that a conversation is taking place. When you ask an AI an impossible question, or a question to which the answer can’t be fact-based, you back it into a corner, and hallucination becomes its only way of completing the task.

So effectively, I got around the problems of working with GPT-3-generated text by trying to break the programme. Maybe this undermines any attempts to make it seem like a credible creative tool? I don’t think so actually. Did you know that when the frisbee was invented it was never intended to be a frisbee? They started out as tins for pies sold by the Frisbie Pie Company, and it was only after students began throwing the pie tins around that the game of frisbee was born. Creative misuse creates so many more possibilities than using something for its intended purpose! Anything can be anything; function is in the hand of the beholder. It doesn’t matter that current publicly available AIs are designed with corporate use in mind. We can use them however we want.

How does this compare to other work you’ve released? And do you see yourself working with AI again? If so, how? (You don’t have to reveal the plans for your magnum opus.)

Hahaha, if I had plans for a magnum opus I definitely would have told you by now! A while back I had a pamphlet called Predictive Text Poems out with the good folks at Spam Press, and that was a similar deal to Memory Foam. In that, the poems were generated by spamming my phone’s predictive text suggestions until I had a big chunk of words, which I then whittled into poem shape… Like with this pamphlet, the predictive text poems were produced with algorithmically generated language, simulated thoughts and feelings, but in the case of predictive text, it was an algorithm that had been trained exclusively on me. The things it was saying were definitely echoes of things I’d typed in the past, and it felt very weird, like hearing your own voice after it’s been recorded. I looked back at that pamphlet recently and I didn’t fully recognise the 2016 version of myself. It wasn’t just that the phrases I use have changed since then; the whole attitude to life and friendship and work in that pamphlet just didn’t match the vibe of what I’m like now. I guess that’s growth? It kind of became a time capsule, or something haunted. There’s definitely something spooky about AIs, simple ones and advanced ones. They’re kind of like zombies. Reading them back is a weirdly out-of-body experience, for both projects I think.

And lastly, writers of all kinds are aware that AI is not going anywhere. Some are fearful, while others embrace it. What relationship do you think AI currently has with authors, poets and other writers? And where do you see it going?

I think it’s scary! But also I think it will be okay. For readers, I think knowing that something was generated by an AI makes it less credible – if there’s not a person behind the text, thinking things over and making deliberate choices, it’s kind of instinctive not to take it seriously. We have this bias which doesn’t allow us to engage with artificial writing in the same way we’d engage with something “real”. So as long as there’s a demand for writing that feels authentic or has had some time and thought put into it, which I think there always will be, then human writers don’t need to be too concerned. It’s not time to retrain in cyber just yet. Even in industries where AI is doing the heavy lifting of writing and replacing humans in some jobs, you still need humans to operate the machine, and humans to check that the AI isn’t just chatting nonsense. So we’re okay for now, at least until there’s an AI that’s capable of reading and understanding its own work, or an AI that can prompt itself. Can an AI prompt itself? I guess it could if you asked it to. That’s probably something I should have tried for the pamphlet. Maybe there’s a poem in that. A poem that’s more authentically artificial than anything in Memory Foam. I wish I’d thought of that earlier.
